TM07 word2vec vs doc2vec#

Loading data#

!mkdir ./data

!wget -P ./data -N https://github.com/p4css/py4css/raw/main/data/sentiment.csv

mkdir: ./data: File exists

--2025-09-21 22:04:58-- https://github.com/p4css/py4css/raw/main/data/sentiment.csv

Resolving github.com (github.com)... 20.27.177.113

Connecting to github.com (github.com)|20.27.177.113|:443...

connected.

HTTP request sent, awaiting response...

404 Not Found

2025-09-21 22:04:59 ERROR 404: Not Found.

import pandas as pd

df = pd.read_csv('data/sentiment.csv')

df.head(5)

/Users/jirlong/opt/anaconda3/lib/python3.9/site-packages/pandas/core/computation/expressions.py:21: UserWarning: Pandas requires version '2.8.4' or newer of 'numexpr' (version '2.8.1' currently installed).

from pandas.core.computation.check import NUMEXPR_INSTALLED

/Users/jirlong/opt/anaconda3/lib/python3.9/site-packages/pandas/core/arrays/masked.py:60: UserWarning: Pandas requires version '1.3.6' or newer of 'bottleneck' (version '1.3.4' currently installed).

from pandas.core import (

| tag | text | |

|---|---|---|

| 0 | P | 店家很給力,快遞也是相當快,第三次光顧啦 |

| 1 | N | 這樣的配置用Vista系統還是有點卡。 指紋收集器。 沒送原裝滑鼠還需要自己買,不太好。 |

| 2 | P | 不錯,在同等檔次酒店中應該是值得推薦的! |

| 3 | N | 哎! 不會是蒙牛乾的吧 嚴懲真凶! |

| 4 | N | 空尤其是三立電視臺女主播做的序尤其無趣像是硬湊那麼多字 |

Tokenization#

import jieba

df['token_text'] = df['text'].apply(lambda x:list(jieba.cut(x)))

df.head()

Building prefix dict from the default dictionary ...

Loading model from cache /var/folders/j3/p4x0mssx55nd8dn903h5wdb00000gn/T/jieba.cache

Loading model cost 0.284 seconds.

Prefix dict has been built successfully.

| tag | text | token_text | |

|---|---|---|---|

| 0 | P | 店家很給力,快遞也是相當快,第三次光顧啦 | [店家, 很, 給力, ,, 快遞, 也, 是, 相當快, ,, 第三次, 光顧, 啦] |

| 1 | N | 這樣的配置用Vista系統還是有點卡。 指紋收集器。 沒送原裝滑鼠還需要自己買,不太好。 | [這樣, 的, 配置, 用, Vista, 系統, 還是, 有點, 卡, 。, , 指紋,... |

| 2 | P | 不錯,在同等檔次酒店中應該是值得推薦的! | [不錯, ,, 在, 同等, 檔次, 酒店, 中應, 該, 是, 值得, 推薦, 的, !] |

| 3 | N | 哎! 不會是蒙牛乾的吧 嚴懲真凶! | [哎, !, , 不會, 是, 蒙牛, 乾, 的, 吧, , 嚴懲, 真凶, !] |

| 4 | N | 空尤其是三立電視臺女主播做的序尤其無趣像是硬湊那麼多字 | [空, 尤其, 是, 三立, 電視, 臺, 女主播, 做, 的, 序, 尤其, 無趣, 像是... |

Training w2v#

from gensim.models import Word2Vec

w2v = Word2Vec(df['token_text'], min_count=1, vector_size=300, window=10, sg=0, workers=4)

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

Input In [4], in <cell line: 1>()

----> 1 from gensim.models import Word2Vec

2 w2v = Word2Vec(df['token_text'], min_count=1, vector_size=300, window=10, sg=0, workers=4)

File ~/opt/anaconda3/lib/python3.9/site-packages/gensim/__init__.py:11, in <module>

7 __version__ = '4.1.2'

9 import logging

---> 11 from gensim import parsing, corpora, matutils, interfaces, models, similarities, utils # noqa:F401

14 logger = logging.getLogger('gensim')

15 if not logger.handlers: # To ensure reload() doesn't add another one

File ~/opt/anaconda3/lib/python3.9/site-packages/gensim/corpora/__init__.py:6, in <module>

1 """

2 This package contains implementations of various streaming corpus I/O format.

3 """

5 # bring corpus classes directly into package namespace, to save some typing

----> 6 from .indexedcorpus import IndexedCorpus # noqa:F401 must appear before the other classes

8 from .mmcorpus import MmCorpus # noqa:F401

9 from .bleicorpus import BleiCorpus # noqa:F401

File ~/opt/anaconda3/lib/python3.9/site-packages/gensim/corpora/indexedcorpus.py:14, in <module>

10 import logging

12 import numpy

---> 14 from gensim import interfaces, utils

16 logger = logging.getLogger(__name__)

19 class IndexedCorpus(interfaces.CorpusABC):

File ~/opt/anaconda3/lib/python3.9/site-packages/gensim/interfaces.py:19, in <module>

7 """Basic interfaces used across the whole Gensim package.

8

9 These interfaces are used for building corpora, model transformation and similarity queries.

(...)

14

15 """

17 import logging

---> 19 from gensim import utils, matutils

22 logger = logging.getLogger(__name__)

25 class CorpusABC(utils.SaveLoad):

File ~/opt/anaconda3/lib/python3.9/site-packages/gensim/matutils.py:22, in <module>

20 import scipy.linalg

21 from scipy.linalg.lapack import get_lapack_funcs

---> 22 from scipy.linalg.special_matrices import triu

23 from scipy.special import psi # gamma function utils

26 logger = logging.getLogger(__name__)

ImportError: cannot import name 'triu' from 'scipy.linalg.special_matrices' (/Users/jirlong/opt/anaconda3/lib/python3.9/site-packages/scipy/linalg/special_matrices.py)

Representsd by w2v#

import numpy as np

all_list = []

for i, tokens in enumerate(df["token_text"]):

temp_w2v = np.zeros(300)

for tok in tokens:

temp_w2v += w2v.wv[tok]

all_list.append(temp_w2v)

X = np.array(all_list)

Training doc2vec#

from gensim.models.doc2vec import Doc2Vec, TaggedDocument

Represented by TaggedDocument#

tagged = [TaggedDocument(words=tokens, tags=[i])

for i, tokens in enumerate(df["token_text"])]

Training model#

d2v = Doc2Vec(tagged, vector_size=100, alpha=0.025, window=5,

min_alpha=0.00025, min_count=5, dm=1)

# dm=1 : ‘distributed memory’ (PV-DM) ;

# dm=0 : ‘distributed bag of words’ (PV-DBOW)

d2v.train(tagged, total_examples=d2v.corpus_count, epochs=20)

Represented by d2v#

import numpy as np

all_list = []

for i, tokens in enumerate(df["token_text"]):

all_list.append(d2v.infer_vector(tokens))

X = np.array(all_list)

X.shape

(6388, 100)

Plotting#

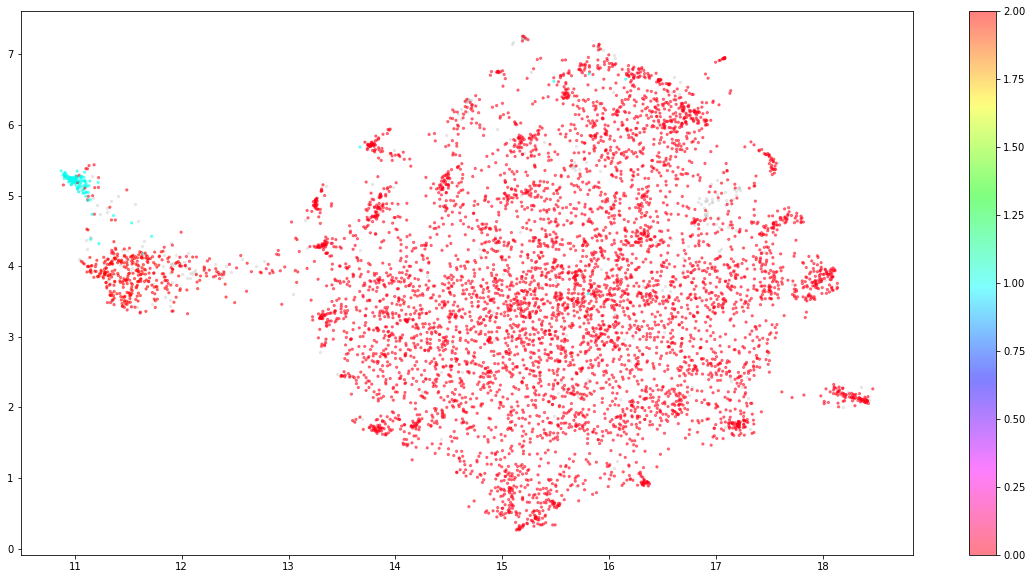

Reduced by umap#

# !pip install umap-learn

import umap

umap_embeddings = umap.UMAP(n_neighbors=15,

n_components=5,

metric='cosine').fit_transform(X)

Clustering by HDBSCAN#

# !pip install hdbscan

import hdbscan

from collections import Counter

cluster = hdbscan.HDBSCAN(min_cluster_size= 50,

metric='euclidean',

cluster_selection_method='eom').fit(umap_embeddings)

df['cluster'] = list(cluster.labels_)

print(df.columns)

print(Counter(df['cluster']))

Index(['tag', 'text', 'token_text', 'cluster'], dtype='object')

Counter({0: 5635, 2: 329, -1: 309, 1: 115})

Plotting#

import matplotlib.pyplot as plt

umap_data = umap.UMAP(n_neighbors=15, n_components=2, min_dist=0.0, metric='cosine').fit_transform(X)

result = pd.DataFrame(umap_data, columns=['x', 'y'])

result['labels'] = cluster.labels_

fig, ax = plt.subplots(figsize=(20, 10))

outliers = result.loc[result.labels == -1, :]

clustered = result.loc[result.labels != -1, :]

plt.scatter(outliers.x, outliers.y, color='#BDBDBD', s=5, alpha=0.3)

plt.scatter(clustered.x, clustered.y, c=clustered.labels, s=5, alpha=0.5, cmap='hsv_r')

plt.colorbar()

<matplotlib.colorbar.Colorbar at 0x286cfbd30>